Update: I did a little more experimenting, and it turns out offset can be *very* competitive. Remember, the key is making sure your idle voltage doesn’t get so low that the system becomes unstable. If you can manage that with offset, great. If you can’t (and want to get your load voltage down further), use a manual voltage.

Playing with iGPU overclocking yesterday got me interested in undervolting the CPU. Given the gains I saw, I planned to push the HD3000’s frequency even higher.

However, before doing that, I wanted to get as much thermal headroom as possible on the i5 2500K. Hence, I started looking into undervolting.

Now unfortunately, info in this area (undervolting the i5 2500K) is sparse. As you can imagine, most people with the K series are using them to overclock, so searches were coming up empty.

Thus, it was time to do my own testing.

—

Default behavior

It’s important to have a rough idea of what happens by default (when everything is left at stock speeds and auto voltage).

On my CPU:

- At idle, voltage drops to slightly under 1.0 V

- At load, voltage goes up to just under 1.4 V

Offset vs Manual behavior

Luckily, the difference here is very easy to understand. Note that this applies to the ASUS Z68 GENE-Z I’m using.

Manual – If you choose this in the BIOS, the voltage you set is always applied. If you set 1.2V, you’ll get 1.2V at idle, and 1.2V under load.

Offset – This modifies the default (Auto) behavior. As I mentioned above, by default I was getting just under 1.0 V at idle and just under 1.4 V under load. For the sake of simplicity, let’s pretend I was getting *exactly* those numbers. If I set the offset to -0.1 V, I would get 0.9 V at idle and 1.3 V under load. If I set it to +0.1 V instead, I would get 1.1 V at idle and 1.5 V under load.

Essentially, by default your voltage fluctuates within a range. Offset will undervolt that entire range (if you go with a negative value), or overvolt that entire range (if you go with a positive value). Manual basically gets rid of the range altogether.
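To make the difference concrete, here’s a toy Python model of the two modes as described above. It assumes the rounded stock values (1.0 V idle, 1.4 V load) and that offset shifts both ends of the range by exactly the same amount, which is how it behaved on this board. `VcoreRange`, `with_offset` and `with_manual` are made-up names for illustration, not part of any BIOS or tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VcoreRange:
    """Idle and load Vcore, in volts."""
    idle: float
    load: float


# Assumed stock ("Auto") behavior, using the rounded numbers from the text.
AUTO = VcoreRange(idle=1.0, load=1.4)


def with_offset(auto: VcoreRange, offset: float) -> VcoreRange:
    """Offset shifts the whole Auto range by the same amount (rounded to mV)."""
    return VcoreRange(idle=round(auto.idle + offset, 3),
                      load=round(auto.load + offset, 3))


def with_manual(voltage: float) -> VcoreRange:
    """Manual pins Vcore to a single value, so idle and load are identical."""
    return VcoreRange(idle=voltage, load=voltage)


if __name__ == "__main__":
    print(with_offset(AUTO, -0.1))  # undervolt the whole range
    print(with_offset(AUTO, +0.1))  # overvolt the whole range
    print(with_manual(1.2))         # no range at all
```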

Testing

I used Intel Extreme Tuning Utility (XTU) as well as CPU-Z.

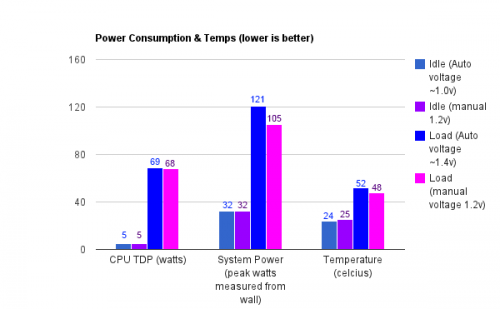

I’ll start with a comparison chart, because it’s less reading for you.

Update: after some further testing, I found that the middle graph below (system power consumption at the wall) is not completely accurate. I couldn’t find the Kill-a-Watt meter, so I used the output reported by my UPS instead. Unfortunately, the UPS seems to “round” to the nearest 8-watt value, so the power consumption values have an inaccuracy of +/- 4 watts (assuming it’s at least rounding properly). The other 2 graphs (left/right) should be accurate.
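As a rough sanity check on those error bars, here’s a small Python sketch. It assumes (hypothetically) that the UPS snaps the true draw to the nearest point on a grid spaced 8 W apart; where that grid starts isn’t known, so `origin` is just a parameter. Sweeping a range of true values shows a single reading is off by at most half a step (4 W), and the difference between two readings can be off by up to twice that.

```python
STEP_W = 8.0  # assumed spacing between the values the UPS can display


def quantize(true_watts: float, step: float = STEP_W, origin: float = 0.0) -> float:
    """Hypothetical UPS display: snap the true draw to the nearest grid point."""
    return origin + step * round((true_watts - origin) / step)


def worst_case_error(step: float = STEP_W, origin: float = 0.0) -> float:
    """Largest |displayed - true| over true draws from 20 W to 120 W (0.01 W steps)."""
    samples = (20.0 + i * 0.01 for i in range(10_001))
    return max(abs(quantize(w, step, origin) - w) for w in samples)


if __name__ == "__main__":
    single = worst_case_error()
    print(f"one reading:      within +/- {single:.2f} W")
    print(f"two-reading diff: within +/- {2 * single:.2f} W")
```

That stacking of two ±4 W errors is why a 16 W difference between two UPS readings carries roughly ±8 W of uncertainty.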

Because throwing all of this into 1 chart (instead of splitting it nicely into a few) doesn’t exactly fall into the category of “Matt’s best time-saving ideas ever”, here’s a little clarification to make it easier to read…

Blue bars represent “auto” (default) voltage: the stuff that fluctuates with the load from 1.0 V to 1.4 V.

Purplish bars represent the fixed (manual) 1.2 V setting.

In each set, the first 2 bars are at idle, and the last 2 bars are at load.

Ok, so what do we notice?

- At idle, there’s almost no difference between 1.0 V and 1.2 V:

  - CPU TDP is a low 5/5 watts.

  - Total system power draw is the same at 32/32 watts (*inaccurate).

  - There is a 1-degree difference in temperature (24/25).

- At load, there’s a rather significant difference. Holding the load voltage at a fixed 1.2 V instead of letting it automatically rise to 1.4 V shaves 4 degrees off the load temperature and 16 watts (* +/- ~8 watts) off system power. However, the processor’s TDP barely goes down (only by 1 watt).

Basically, there are gains to be had by lowering voltage at load. There aren’t really gains to be had by lowering voltage at idle.

Applying the findings to the “offset vs manual” question

We went from a load voltage of 1.4 V down to 1.2 V by using “manual” voltage. To do this via “offset” voltage, we would have to use an offset of -0.2 V.

However, the problem is that an “offset” of -0.2 V would also lower the idle voltage by the same amount. Remember, the default idle voltage is 1.0 V, so with an offset of -0.2 V we’d idle at 0.8 V.

…and 0.8 volts is getting so low that the system may not boot.
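Written out with the rounded auto values from earlier (1.0 V idle, 1.4 V load), the offset needed for a 1.2 V load, and the idle voltage it drags down along with it, are:

```latex
\begin{aligned}
\Delta V_{\text{offset}} &= V_{\text{load,target}} - V_{\text{load,auto}} = 1.2\,\text{V} - 1.4\,\text{V} = -0.2\,\text{V} \\
V_{\text{idle,offset}} &= V_{\text{idle,auto}} + \Delta V_{\text{offset}} = 1.0\,\text{V} - 0.2\,\text{V} = 0.8\,\text{V}
\end{aligned}
```

Manual mode sidesteps the second line entirely, since it sets idle and load to the same 1.2 V.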

Conclusion:

Offset is commonly seen as the “recommended” method for overvolting (for overclocking), and it’s easy to see why: if you need 1.5 V to handle your overclock at load, you probably don’t need that much at idle. Offset might still raise your idle voltage in that case, but not nearly as much as a fixed “manual” value would.

However, undervolting raises an entirely separate issue: if we use “offset”, it’s possible to push the idle voltage too far down. And as we saw in the charts, there isn’t really any advantage to lowering the idle voltage anyway; the substantial benefits come from reducing the load voltage.

So… manual voltage appears to be the best way to undervolt. That said, remember that all processors differ, so you may want to test your own CPU. For example, if…

- …your default idle voltage is quite high…

- …the default “spread” between your idle/load voltages is small…

- …you’re only undervolting a slight amount…

…then offset might make more sense for you. Say your default idle/load voltages were 1.2/1.3 V: you probably wouldn’t have to worry about offset pushing the idle voltage too low, since you’d likely run into stability problems at load long before idle dropped below 1.0 V.
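The rule of thumb above can be sketched as a tiny Python helper. It’s only an illustration under two assumptions: offset shifts idle and load by the same amount, and 1.0 V is treated as the lowest idle voltage you’re comfortable with. `MIN_IDLE_V` is a made-up threshold (your own CPU’s safe floor may differ), and `idle_after_offset` and `suggest_mode` are hypothetical names.

```python
MIN_IDLE_V = 1.0  # assumed comfort floor for idle Vcore; not a spec value


def idle_after_offset(auto_idle_v: float, auto_load_v: float, target_load_v: float) -> float:
    """Idle voltage you'd end up with if you used offset to reach target_load_v."""
    offset = target_load_v - auto_load_v  # negative when undervolting
    return auto_idle_v + offset


def suggest_mode(auto_idle_v: float, auto_load_v: float, target_load_v: float,
                 min_idle_v: float = MIN_IDLE_V) -> str:
    """Prefer offset if it keeps idle at or above the floor, otherwise manual."""
    idle = idle_after_offset(auto_idle_v, auto_load_v, target_load_v)
    # Small tolerance so float noise (e.g. 0.9999999...) doesn't flip the answer.
    return "offset" if idle >= min_idle_v - 1e-9 else "manual"


if __name__ == "__main__":
    # The i5 2500K tested here (rounded): 1.0 V idle / 1.4 V load, aiming for 1.2 V at load.
    print(suggest_mode(1.0, 1.4, 1.2))
    # The hypothetical CPU from the text: 1.2 V idle / 1.3 V load, same target.
    print(suggest_mode(1.2, 1.3, 1.2))
```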

—

Other tidbits

Since I’d collected a good bit of data during the process, I’ll leave you with the following:

- I continued dropping the voltage (manual) below 1.2 V in small increments.

- While the load temperature had been 48 degrees at 1.20 V, by the time I hit 1.14 V it was down to 45 degrees. However, the CPU TDP reported by Intel’s tool did not change (it still peaked at 68 watts in each test).

- I used the UPS’s display to measure the computer’s power draw. Unfortunately, I’m not sure of its accuracy: while the idle wattage was completely stable at 32 watts, the load wattage fluctuated quite a bit, usually between 3 values (for example, 81/89/97). As I slowly dropped the Vcore, the load reading still fluctuated between those numbers but started “favoring” the lower values. I may have to run the tests again with the Kill-a-Watt meter instead, just in case the worst-case scenario is happening (maybe the UPS has a fixed set of numbers it displays and simply rounds to the nearest one).

The most tangible benefit seems to be the temperature decrease: dropping from the default load voltage of ~1.4 V down to 1.2 V shaved off 4 degrees, and dropping further to 1.14 V shaved off another 3.

Cheers.