One of my mini ITX motherboards is limited to a single PCI Express x1 slot. For a few months I was running on the common 4-port Marvell 9215 card that can be found at all the major vendors. However, I was in the situation where I needed to add a few more hard drives.

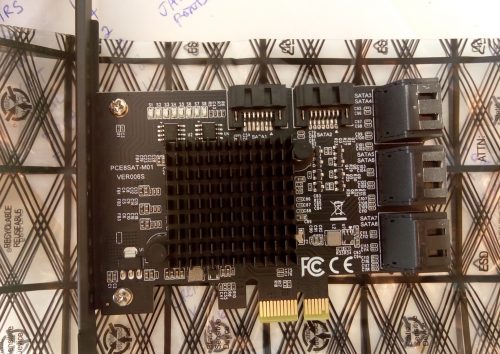

After a bit of looking, I came across the 8-port cards that are advertised as having a “Marvell 88SE9215 + JMicron JMB5xx” chip. These cards happened to be pretty low cost, so I picked one up. The model number printed on the card was PCE8SAT-M01 VER0065. I’ll start with a quick look at the front and back.

Incidentally, the 8 little LEDs seen on the front each correspond to a port: an LED is on when a drive is connected and blinks when there’s data transfer. They’re an obnoxiously bright blue.

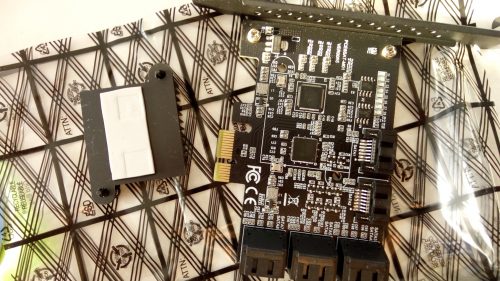

The first thing I did was pull the heatsink to get a look at the chips. My suspicion was that the Marvell 9215 was being used as the main HBA and that the “JMB5xx” was going to be the JMicron JMB575 port multiplier.

Yep! On the left the JMicron JMB575 and on the right the Marvell 88SE9215.

If you check out the dismounted heatsink above, you’ll see a thermal pad. The bad news is that you can’t replace the pad with thermal paste: other chips would short against the heatsink if the pad were removed. The good news is that the max temperature I saw during my test runs was about 37C. The heatsink is well-sized and I don’t foresee any temperature issues.

Power Consumption

As this was intended for a system that runs 24/7, I was curious as to the operating cost. In other places I’d seen the Marvell 9215 listed as being in the 2W region and was hoping the port multiplier wouldn’t balloon that figure.

I tested system consumption from the wall with and without the card installed. No hard drives, just startup into the BIOS.

I saw a 2-3W increase with the card installed. Definitely reasonable.

Throughput performance/benchmarking (basic)

One AliExpress seller suggested that “The fastest speed is 380-450m/s in PCI-E 2.0. If it is plugged into PCI-E1.0, the speed only runs 200-300m/s”, and also went on to mention that if ports are used at the same time the speed will slow down.

To get a rough throughput test, I used “dd” to read from the drives and spit the output to /dev/null . This is essentially a sequential read test without any real filesystem overhead. Block size was set at 4MB.
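For anyone who would rather script the same kind of test, here is a minimal Python sketch of a dd-style sequential read. It is not the command used above: the function names are made up for illustration, and like plain dd it reads through the page cache, so a repeat run over the same data can look faster than the drive really is.

```python
import time


def sequential_read(path, block_size=4 * 1024 * 1024, max_bytes=None):
    """Read `path` front to back, discard the data, return (bytes_read, seconds).

    Hypothetical helper: `path` would be a block device such as /dev/sdX
    (needs read permission) or an ordinary large file.
    """
    total = 0
    start = time.monotonic()
    # buffering=0 opens a raw file object, so each read() asks the OS for
    # at most block_size bytes, much like dd's bs= option.
    with open(path, "rb", buffering=0) as src:
        while max_bytes is None or total < max_bytes:
            want = block_size if max_bytes is None else min(block_size, max_bytes - total)
            chunk = src.read(want)
            if not chunk:  # end of device or file
                break
            total += len(chunk)
    return total, time.monotonic() - start


def mb_per_s(total_bytes, seconds):
    """Convert a byte count and a duration to MB/s (1 MB = 10**6 bytes)."""
    return total_bytes / seconds / 1e6 if seconds > 0 else float("inf")
```

Calling `sequential_read("/dev/sdX", max_bytes=10 * 1024**3)` and passing the result to `mb_per_s` would give a figure comparable to the ones below, with the device name being a placeholder.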

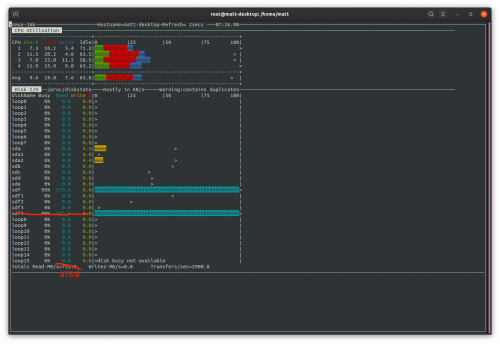

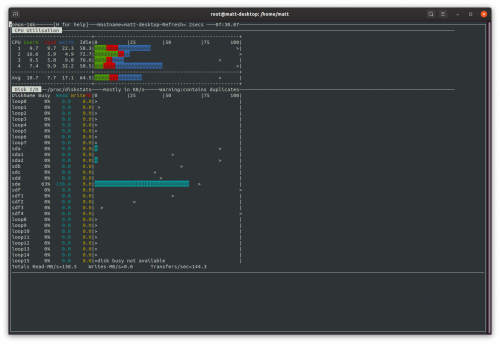

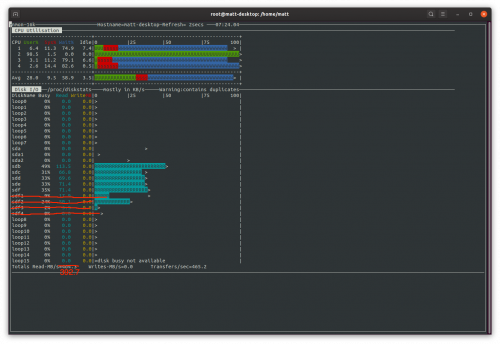

I monitored the speeds using “nmon” and took random screenshots during each test. Thus, results aren’t likely to be the absolute peak, nor are they likely to be the absolute bottom.
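If nmon isn’t available, per-drive read rates can be approximated from two snapshots of /proc/diskstats. The sketch below is an assumption-laden stand-in, not how nmon works internally; the function names are invented, and it relies on the kernel’s documented layout where the sixth field is the cumulative number of 512-byte sectors read.

```python
SECTOR_BYTES = 512  # /proc/diskstats counts sectors in 512-byte units


def sectors_read(diskstats_text):
    """Map device name -> cumulative sectors read, from /proc/diskstats text."""
    result = {}
    for line in diskstats_text.splitlines():
        fields = line.split()
        if len(fields) < 6:
            continue  # skip blank or malformed lines
        # fields: major, minor, name, reads completed, reads merged, sectors read, ...
        result[fields[2]] = int(fields[5])
    return result


def read_rates_mb_s(before, after, interval_s):
    """Per-device read rate in MB/s between two snapshots taken interval_s apart."""
    a, b = sectors_read(before), sectors_read(after)
    return {
        dev: (b[dev] - a[dev]) * SECTOR_BYTES / interval_s / 1e6
        for dev in b
        if dev in a  # ignore devices that appeared between snapshots
    }
```

In practice you would read the file, sleep for a second or two, read it again, and pass both strings plus the interval to `read_rates_mb_s`. Summing the values for the drives on the card gives a total like the ones quoted in the tests.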

Test 1: A single SSD

This was an older SSD and I don’t know what its max throughput is. The result was 375MB/s.

Test 2: A single HDD

This test is essentially limited by the throughput of the drive: 130.4MB/s at the time of the screenshot.

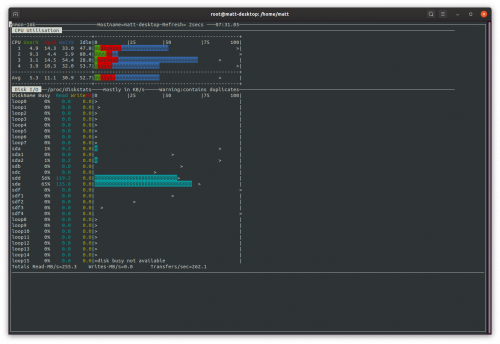

Test 3: Two hard drives

With 2 drives, we hit 255.3 MB/s total – still limited by the hard drive throughput.

Test 4: Three hard drives

I suspect that the combined total of 296.0 MB/s was due to the 3rd drive hitting a bit of an output slump, as total output improves in the next test.

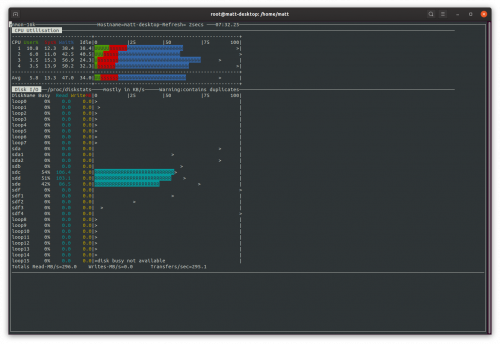

Test 5: Four hard drives

A total of 382.5 MB/s. Yes, it took 4 spinning rust drives to hit the speed of our single SSD from the first test.

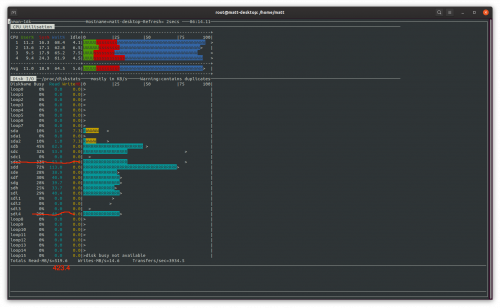

Speaking of that single SSD… why not combine it with the hard drives…?

Test 6: Single SSD plus 4 hard drives

Here we hit 392.7 MB/s. It’s starting to become clear that the typical throughput we can expect from this SATA controller is just shy of 400MB/s. That’s pretty fair seeing as PCI Express 2.0 x1 has a max theoretical bandwidth of 500MB/s.
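The 500MB/s figure falls out of the PCIe 2.0 signalling rate (5 GT/s per lane) and its 8b/10b encoding, where every 10 bits on the wire carry 8 bits of data. A quick sketch of that arithmetic, with a made-up function name, also shows how close the measured total gets:

```python
def pcie_lane_mb_s(gigatransfers_per_s, payload_bits, line_bits):
    """Theoretical one-direction bandwidth of a single PCIe lane in MB/s."""
    usable_bits_per_s = gigatransfers_per_s * 1e9 * payload_bits / line_bits
    return usable_bits_per_s / 8 / 1e6


PCIE2_X1 = pcie_lane_mb_s(5.0, 8, 10)  # PCIe 2.0: 5 GT/s with 8b/10b encoding
PCIE1_X1 = pcie_lane_mb_s(2.5, 8, 10)  # PCIe 1.x: 2.5 GT/s, same encoding

measured = 392.7  # total from the SSD plus 4 hard drives test, in MB/s
print(f"PCIe 2.0 x1: {PCIE2_X1:.0f} MB/s theoretical, {measured / PCIE2_X1:.1%} achieved")
print(f"PCIe 1.x x1: {PCIE1_X1:.0f} MB/s theoretical")
```

The remaining gap is plausibly packet and protocol overhead on the PCIe link, though I didn’t measure that directly. The PCIe 1.x number also lines up with the seller’s claim that speeds drop to the 200-300 range in an older slot.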

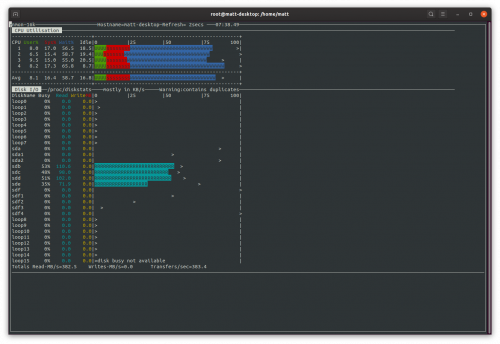

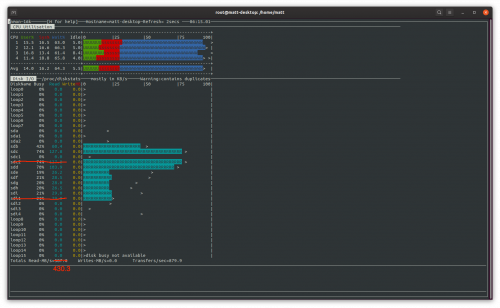

Test 7: Putting a drive on all 8 ports

For this test I scrounged up a number of other drives. I took the OS drive off the card and put it onto a motherboard port. I also started the system with “mitigations=off” and dropped to a 1MB block size. We still have our SSD plus 7 other drives.

423.4 and 430.3 MB/s.

Definitely a good showing.

Extra Bits and Conclusion

I checked a few other items just to verify they were working. I’ll phrase these as questions, since these were things I was wondering while waiting for the card to arrive.

Does the card support FBS?

Yes, this card does support FBS (FIS-Based Switching). “dmesg | grep AHCI” and/or “dmesg | grep FBS” spit out “FBS is enabled” and “FBS is disabled” numerous times during boot, ending with it being enabled. I actually wrote to both a good and bad drive simultaneously and when the bad drive hung, the good drive kept motoring along while the OS repeatedly reset the link and eventually dropped the bad drive. I suspect that with CBS (command-based switching) it may have hung up on both drives.
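Since the messages flip back and forth during boot, what matters is the last one. A small sketch that picks the final state out of saved dmesg output, assuming only the two message strings quoted above (the function name is hypothetical, and the text around each message will vary by system):

```python
import re

# Matches the two messages quoted above; whatever precedes them on the
# log line is ignored, since it differs between systems.
FBS_RE = re.compile(r"FBS is (enabled|disabled)")


def final_fbs_state(log_text):
    """Return 'enabled', 'disabled', or None based on the last FBS message."""
    matches = FBS_RE.findall(log_text)
    return matches[-1] if matches else None
```

Feeding it the output of `dmesg` (which may need root on some distributions) should return `'enabled'` on a setup like this one.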

FBS support was somewhat expected, as both the Marvell 88SE9215 and JMicron JMB575 claimed to support FBS.

Does the card require drivers in Linux?

Nope. Plugged the card in, started the system, and drives simply showed up. Note that I used Ubuntu 19.10. There was an included driver disk (I suspect for Windows): I wouldn’t be surprised if there were a process you needed to follow to get it working there.

Can you put the boot drive on a port?

2 of the ports (#2 and #3) worked for the boot drive, the others did not. Note that having this card installed did cause the BIOS to take longer to finish the POST.

Note that cards with port multipliers are notorious for needing drives plugged into specific ports: I didn’t run into that issue but I didn’t test various combinations either so it’s possible you may need to tinker some.

Does SMART work on drives through the port multiplier?

Yep. I ran “smartctl -a” against all 8 devices after the final test. Everything showed up.
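Checking 8 drives by hand gets tedious, so here is a rough sketch of looping over them. It assumes the drives show up as consecutive /dev/sdX names starting from a letter you choose, which depends entirely on your system, and the helper names are invented for this example.

```python
import string
import subprocess


def smartctl_commands(first="a", count=8):
    """Build one `smartctl -a` command per drive, assuming /dev/sdX naming."""
    start = string.ascii_lowercase.index(first)
    letters = string.ascii_lowercase[start:start + count]
    return [["smartctl", "-a", f"/dev/sd{letter}"] for letter in letters]


def run_all(commands):
    """Run each command and return {last argument (the device): exit status}."""
    return {
        cmd[-1]: subprocess.run(cmd, capture_output=True, text=True).returncode
        for cmd in commands
    }
```

`run_all(smartctl_commands("b", 8))` would cover /dev/sdb through /dev/sdi. smartctl generally needs root, and a non-zero exit status is worth a closer look at that drive’s full output.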

—

Conclusion

Overall I’m quite pleased with the card thus far.

- Quite a bit cheaper than other 8-port options.

- Works on a PCI Express x1 lane.

- All 8 ports worked.

- FBS worked.

- Power consumption was reasonably low (2-3W).

- Decent-sized heatsink, didn’t get too warm (37 degrees C).

- Could boot off a couple ports.

- No hiccups during the testing, no crazy errors in the system log after the tests.

I’ll update if I run into issues down the line. However, for the time being my thought would be that if you need 8 more SATA ports and only have a PCIE x1 slot open, this card is probably going to be tough to beat.

I dug it up as there are several bad Amazon reviews reporting defective cards, poor build quality (like the one where a few cards arrived with soldered-together connectors, causing an 8TB drive to die) and weird issues such as "drives disappearing" (another mentions a random 100MB partition appearing, but I guess that was a faulty drive, as an edit says it persisted even after replacing the card).

So, to me there seems to be nothing wrong with taking a 4-port HBA and "blowing it up" to 8 ports by putting a 1-to-5 expander on one of the 4 main ports, but does it really work that simply? This would mean 3 ports get dedicated full bandwidth, while the other 5 are bottlenecked because they share just one uplink expanded to 5 ports via switching, which leaves each of those 5 ports with only about 20% of the bandwidth. Can you confirm this? Or is this just me not really understanding SATA port expansion?

Would like to get a reply, maybe even via e-mail. Thanks in advance.

another Matt

As for the port multiplier itself, there are 2 common methods in use to actually multiply out the ports - CBS and FBS. CBS (command-based switching) is the lesser of the 2 - it cannot communicate with all disks at once, it needs to wait for a disk to respond before issuing a command to another disk, and if a drive hangs there's a high probability that all disks will be unreachable until it sorts itself out. FBS (FIS-based switching), on the other hand, can juggle commands between disks somewhat simultaneously and performance should be higher. In both situations you're capped by the speed of the SATA port which feeds the port multiplier, so yes, all 5 drives in your example are divvying up the 1 port and are "bottlenecked".

As to reliability... the fact that basic HBAs "just work" (mostly) without corrupting hard drives on a regular basis is somewhat impressive on its own. Adding the complexity of a port multiplier is really adding some additional risk. If reliability is the primary concern, I'd generally go with multiple HBAs and try to sort drives via RAID (or better: using backups) in such a way that an HBA going haywire won't kill all the data. Trying to do the same with port multipliers thrown in the mix.... you *really* want to ensure you've got backups for any important data.

As for SATA/SAS port multipliers: Yeah, I read up on it on Wikipedia right after I posted my comment, but thank you for your effort anyway.

I'm currently in a situation where I would have to somehow slip in a Linux VM layer and a 2nd GPU for it if I tried to stay on Windows instead of migrating to Linux, as Windows only supports JBOD, RAID0 and RAID1 out of the box (RAID5 is locked away for server versions only). As I wasn't able to find any reliable driver to set up a software RAID on Windows, I'm either stuck with my current setup or would have to migrate to Linux, which would require at least a 2nd cheap GPU for the Linux system so my current beefy one would be free for VM passthrough.

I guess, if I build a new system, I should take your advice to use multiple non-"expanded" HBAs and just build some sort of software raid based on linux mdadm.

Last two images show how the card is interconnected:

It has a PCIe 2.0 x1 lane ("up to" 500MB/s) split by the Marvell 88SE9215 HBA between 4 SATA ports (~125MB/s each when all are busy). One of those SATA ports is further split by the JMicron JMB575 between 5 SATA ports (~25MB/s each). The optimal number of spinning HDDs would be 4, at ~125MB/s each. More drives would hit the bottleneck of the PCIe 2.0 x1 link.
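The per-port numbers in this comment come from a simple even-split model, which can be written out as below. Treat it as a worst-case illustration under that assumption only: it supposes every port is saturated at once and that both chips divide bandwidth evenly, neither of which was verified. The function name and defaults are illustrative.

```python
def worst_case_shares(uplink_mb_s=500.0, hba_ports=4, multiplier_ports=5):
    """Even-split model: returns (MB/s per HBA port, MB/s per multiplier port).

    Assumes all ports are busy simultaneously and share bandwidth equally.
    """
    per_hba_port = uplink_mb_s / hba_ports
    per_multiplier_port = per_hba_port / multiplier_ports
    return per_hba_port, per_multiplier_port


direct, behind_multiplier = worst_case_shares()
print(f"direct port: ~{direct:.0f} MB/s, multiplier port: ~{behind_multiplier:.0f} MB/s")
```

When some ports are idle, the busy ones are not held to these shares, which fits the single SSD reaching 375MB/s on its own in the first test.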

https://www.sybausa.com/index.php?route=product/product&path=64_181_85&product_id=156

OR

from EVGA Motherboard Download center: https://www.evga.com/support/download/

choose "Motherboard" tab > Family: "Intel Z87" > Part Number: "EVGA Z87 FTW..."> Type: "SATA 3/6G (Non-Intel)"

The Windows 8.1 drivers work fine with Windows 10.

I bought it from a vendor who wrote that it is a RAID controller card, but I can't configure the card as a RAID.

Maybe I was cheated?

Thanks

To optimize my two RAID5 setups, I traced the board connectors to figure out which ports go to the ASM1064 and which ones to the JMB575. Turns out ports 1, 2 and 4 are connected to the ASM1064. For the stacked connectors, the upper connectors are ports 3, 5 and 7, and the lower connectors are ports 4, 6 and 8 respectively. My final setup was mainboard SATA, port 1 and port 3 for one RAID5 and ports 2, 4 and 5 for the other RAID5. It is unlikely that both arrays will see high I/O at the same time, so the port multiplier penalty is acceptable.